An Overlooked Solution For 'Virtual Event Fatigue'


by Luis Cuenca Montes

Technical Lead, Client Services

Isolation. Travel restrictions. Same four walls.

Video and web conferencing tools can only do so much to create meaningful human connection in our new, COVID-induced normal, and they do little to feed our cultural appetites.

Imagine a web application that combines video conferencing technology and spatialized audio to create an immersive experience of togetherness in the physical world.

Ideally, this hypothetical application would have to provide:

With the StreetMeet experiment, we achieved all of these goals by blending High Fidelity’s Spatial Audio with Twilio and the Google Street View API to create a unique experience that lets you meet friends, family, and colleagues anywhere. The result is the ability to see and hear each other face to face while exploring a real physical environment together.

StreetMeet has a lot in common with other Augmented Reality applications, in which a three-dimensional scene is projected on top of a real image or camera feed. In our case, the background is a static panoramic image taken from a fixed point and provided by Google Street View. This allows us to make certain simplifications, but it also imposes some constraints that we will have to deal with.

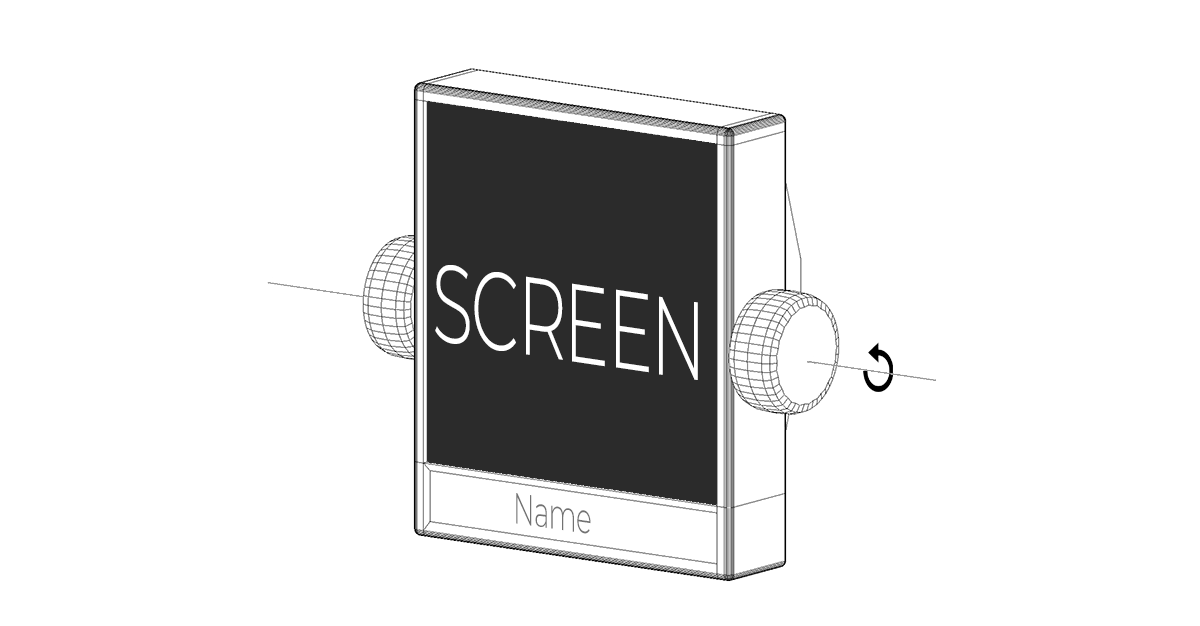

We need a way to represent every user connected to our 3D world with a design that is extremely simple yet still conveys a unique, personalized image of each user. Our design resembles a floating monitor that pivots around two anchor points, one on each side. We chose the model's dimensions so that an avatar's position and orientation can be perceived accurately from any other point in the scene.

We use Twilio to get each remote user's webcam video feed and render it onto the screen of their avatar model as a texture. Click here for a complete example of how to integrate Twilio and High Fidelity’s Spatial Audio API in your application.


With High Fidelity’s Spatial Audio API, we can send the microphone audio stream together with the avatar's position and orientation, and receive the resulting spatialized audio along with all of the data (positions and orientations) needed to render the other connected avatars accurately in the 3D scene with Three.js. Click here for a full guide on how to use High Fidelity’s Spatial Audio API in your projects.

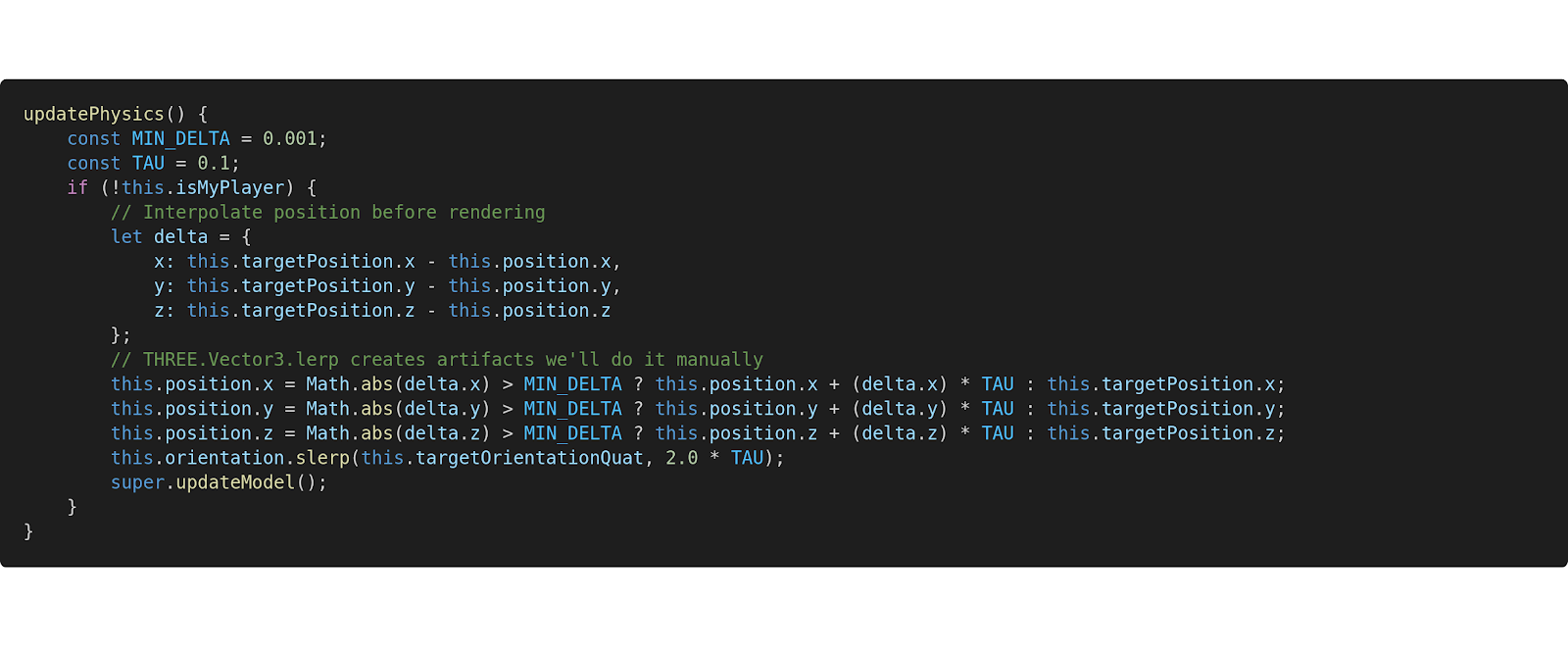

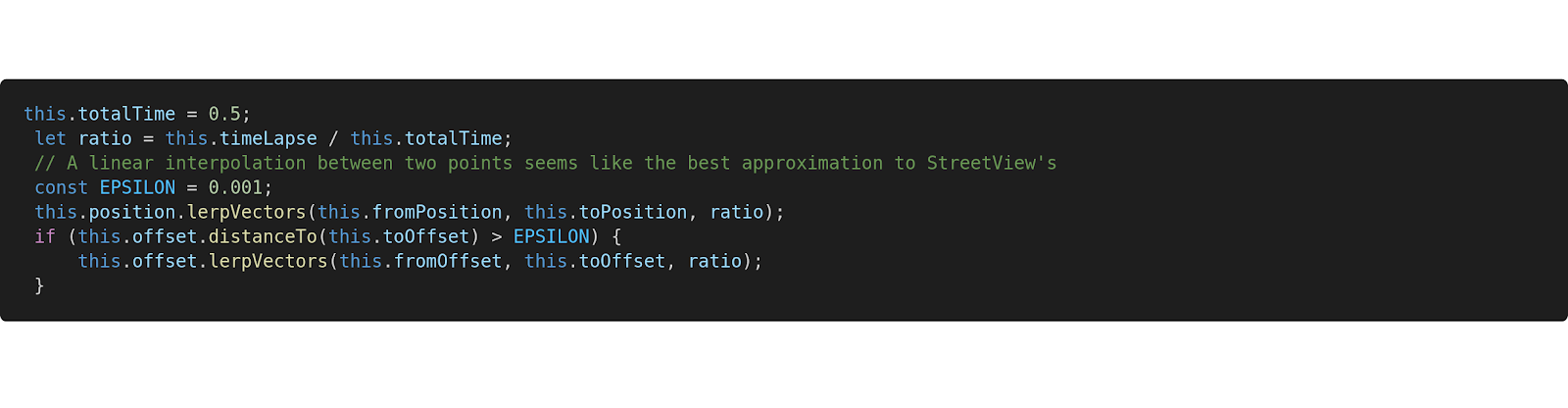

Because position and orientation updates arrive less frequently than our rendering loop runs (60 Hz), we interpolate this data on the client to render smooth transitions between positions and orientations on every frame.
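The article does not say exactly how StreetMeet interpolates, so what follows is one possible approach under stated assumptions: on each frame, the rendered avatar moves a fraction of the way toward the most recent network sample, using linear interpolation for position and spherical linear interpolation (slerp) for the orientation quaternion. The `Vec3`/`Quat` types, the function names, and the `smoothingSeconds` time constant are hypothetical. In a Three.js project, the built-in `Vector3.lerp` and `Quaternion.slerp` methods would normally replace the hand-written helpers.

```typescript
// Sketch: smoothing avatar motion between network updates.
// All names and the smoothing constant are illustrative assumptions.

type Vec3 = { x: number; y: number; z: number };
type Quat = { x: number; y: number; z: number; w: number };

// Linear interpolation between two positions, t in [0, 1].
function lerpVec3(a: Vec3, b: Vec3, t: number): Vec3 {
  return {
    x: a.x + (b.x - a.x) * t,
    y: a.y + (b.y - a.y) * t,
    z: a.z + (b.z - a.z) * t,
  };
}

// Spherical linear interpolation between two unit quaternions.
function slerp(a: Quat, b: Quat, t: number): Quat {
  let dot = a.x * b.x + a.y * b.y + a.z * b.z + a.w * b.w;
  let bx = b.x, by = b.y, bz = b.z, bw = b.w;
  // q and -q encode the same rotation; flip b to take the shorter arc.
  if (dot < 0) {
    dot = -dot; bx = -bx; by = -by; bz = -bz; bw = -bw;
  }
  let wa: number;
  let wb: number;
  if (dot > 0.9995) {
    // Nearly identical rotations: plain lerp avoids dividing by ~0.
    wa = 1 - t;
    wb = t;
  } else {
    const theta = Math.acos(dot);
    const sinTheta = Math.sin(theta);
    wa = Math.sin((1 - t) * theta) / sinTheta;
    wb = Math.sin(t * theta) / sinTheta;
  }
  const qx = wa * a.x + wb * bx;
  const qy = wa * a.y + wb * by;
  const qz = wa * a.z + wb * bz;
  const qw = wa * a.w + wb * bw;
  // Renormalize to keep the result a unit quaternion.
  const len = Math.hypot(qx, qy, qz, qw);
  return { x: qx / len, y: qy / len, z: qz / len, w: qw / len };
}

type AvatarPose = { position: Vec3; orientation: Quat };

// Per-frame step: move the rendered pose toward the latest received pose.
// The exponential blend factor makes smoothing independent of frame rate.
function stepAvatar(
  rendered: AvatarPose,
  target: AvatarPose,
  dtSeconds: number,
  smoothingSeconds = 0.1, // hypothetical tuning value
): AvatarPose {
  const t = 1 - Math.exp(-dtSeconds / smoothingSeconds);
  return {
    position: lerpVec3(rendered.position, target.position, t),
    orientation: slerp(rendered.orientation, target.orientation, t),
  };
}
```

Exponential smoothing trades a small amount of lag for simplicity. Buffering the last two received samples and interpolating between them is a common alternative that reproduces the sender's path more exactly at the cost of added latency.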

The name and color of each avatar are computed from the ID that the Spatial Audio API assigns to its connection. Keep in mind that these IDs are generated randomly and change whenever a new connection is established.
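One straightforward way to turn an ID into a stable name and color is to hash it. The sketch below assumes a 32-bit FNV-1a hash, a hypothetical `NAMES` list, and an HSL color string; the article does not specify StreetMeet's actual scheme.

```typescript
// Sketch: derive a display name and color deterministically from an ID.
// FNV-1a, the NAMES list, and the HSL mapping are illustrative assumptions.

const NAMES = ["Otter", "Heron", "Lynx", "Falcon", "Badger", "Marten"];

// 32-bit FNV-1a hash over the string's UTF-16 code units.
function fnv1a32(input: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < input.length; i++) {
    hash ^= input.charCodeAt(i);
    // Multiply by the FNV prime modulo 2^32.
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

function avatarStyle(id: string): { name: string; color: string } {
  const h = fnv1a32(id);
  // Use different bits for name and hue so they are less correlated.
  const avatarName = NAMES[h % NAMES.length];
  const hue = (h >>> 8) % 360;
  return { name: avatarName, color: `hsl(${hue}, 70%, 55%)` };
}
```

Every client computes the same style for the same ID without any extra messages. Because the ID is regenerated on each connection, a user who reconnects will appear with a different name and color.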

The Google Street View API provides tools to explore and visualize, on the web, 98% of the inhabited Earth (over 10 million miles) as a series of panoramic images captured along multiple paths, including parks and the interiors of monuments and museums.

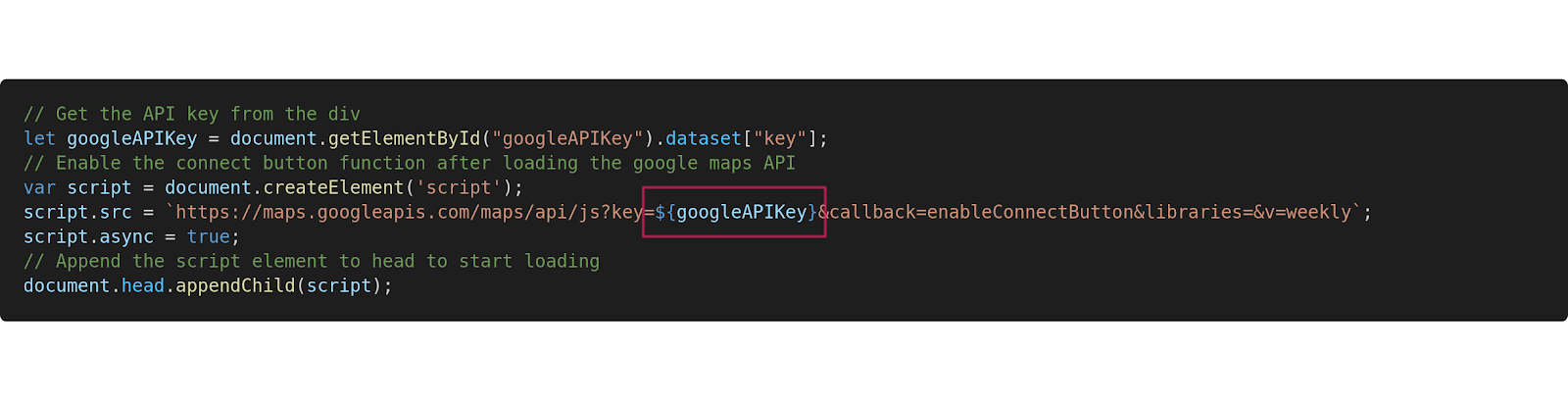

To use Google’s API, log in to Google's Cloud Console with a Gmail account, create a new project that uses the Street View API, and generate an API key for the application. Here's more info on how to set this up in Google's Cloud Console.

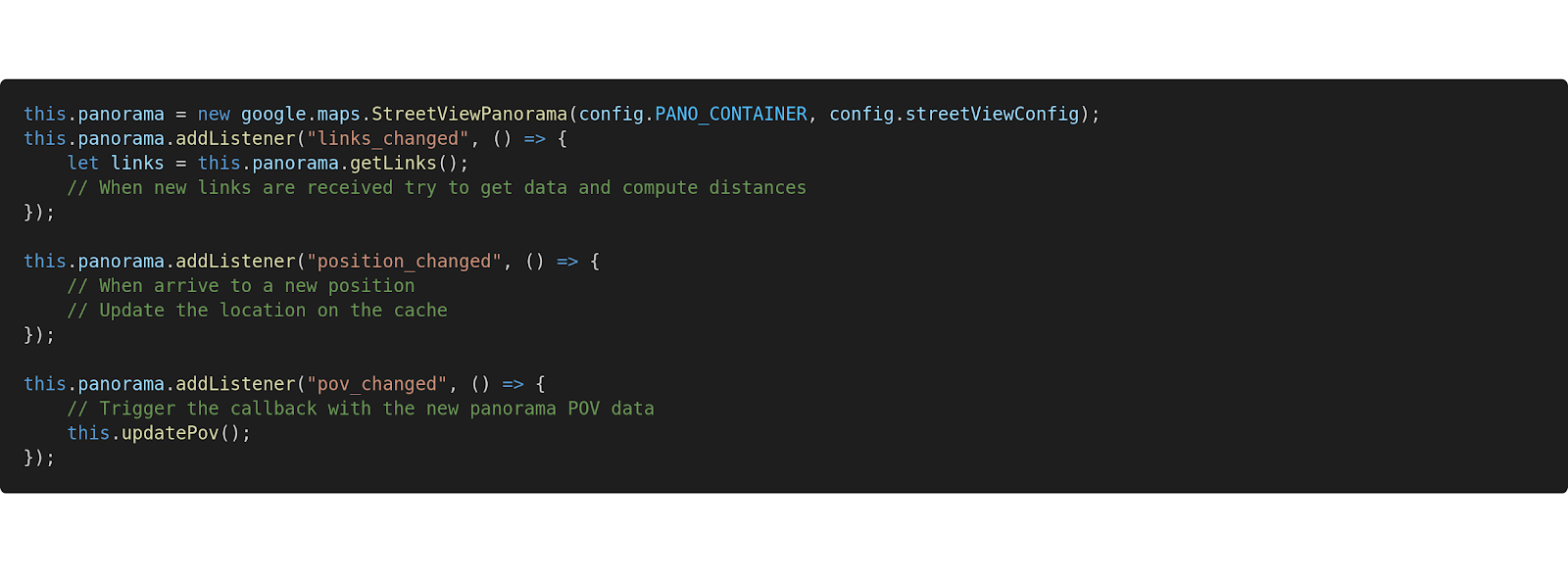

The Google Street View API adds its own canvas and controls to the DOM. It also provides useful callbacks that help us draw our scene on top of its canvas, using a classic Augmented Reality setup: a second, overlapping WebGL canvas in which we render our avatars with Three.js.


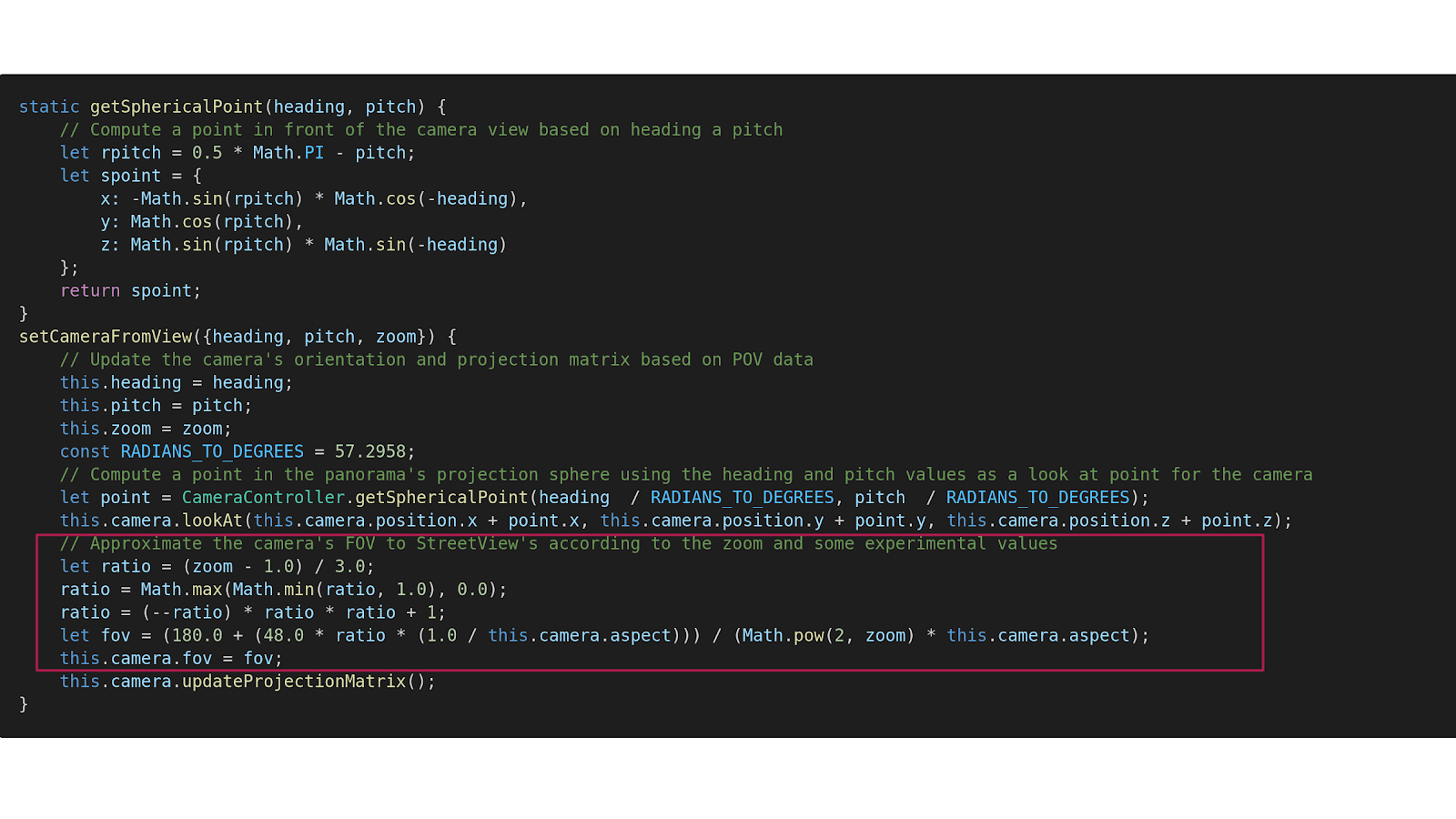

Our main challenge now is to maintain some degree of spatial coherence between the two scenes. To do so, we need to estimate some parameters that Google’s API does not expose explicitly.
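The field of view is one such parameter. The sketch below assumes the commonly used approximation that Street View's horizontal field of view is 180° / 2^zoom, and converts that to the vertical field of view in degrees, which is what Three.js's `PerspectiveCamera.fov` expects. The function names and the 179° clamp are hypothetical, and the zoom-to-FOV relation should be checked visually against the panorama.

```typescript
// Sketch: estimate the overlay camera's FOV from Street View's zoom level.
// ASSUMPTION: horizontal FOV ≈ 180° / 2^zoom (an approximation, not an
// API-exposed value). MAX_HFOV_DEG is a hypothetical clamp.

const DEG = Math.PI / 180;
const MAX_HFOV_DEG = 179; // keep below 180° so tan() stays finite

function streetViewHorizontalFov(zoom: number): number {
  return Math.min(180 / Math.pow(2, zoom), MAX_HFOV_DEG);
}

// Convert a horizontal FOV to a vertical FOV (both in degrees) for a
// viewport with the given width / height aspect ratio.
function horizontalToVerticalFov(hFovDeg: number, aspect: number): number {
  const halfH = (hFovDeg * DEG) / 2;
  return (2 * Math.atan(Math.tan(halfH) / aspect)) / DEG;
}
```

When the panorama's zoom or the window size changes, the result would be assigned to `camera.fov` (with `camera.aspect` updated to match), followed by `camera.updateProjectionMatrix()` so Three.js rebuilds its projection.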


We need some extra information in order to properly synchronize the views from both canvases:

We can derive the panoramic camera's rotation quaternion from the heading and pitch values the API provides. First, we compute a point in space from the heading and pitch; then we use it as the look-at point for the Three.js camera.
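A sketch of that heading/pitch step follows. It assumes Three.js's default right-handed axes with +Y up, and maps north to -Z and east to +X (an arbitrary but consistent choice). Heading is taken as degrees clockwise from north and pitch as degrees upward (90° straight up, -90° straight down), as in Street View's point of view. `povToLookAt` is a hypothetical helper name.

```typescript
// Sketch: turn a Street View heading/pitch into a look-at point.
// ASSUMPTIONS: +Y up, north = -Z, east = +X; heading clockwise from north,
// pitch upward from the horizon, both in degrees.

type Vec3 = { x: number; y: number; z: number };

function povToLookAt(
  headingDeg: number,
  pitchDeg: number,
  cameraPos: Vec3, // panorama center in scene coordinates
  distance = 1,
): Vec3 {
  const h = (headingDeg * Math.PI) / 180;
  const p = (pitchDeg * Math.PI) / 180;
  // Unit direction vector for this heading and pitch.
  const dx = Math.cos(p) * Math.sin(h);
  const dy = Math.sin(p);
  const dz = -Math.cos(p) * Math.cos(h);
  return {
    x: cameraPos.x + dx * distance,
    y: cameraPos.y + dy * distance,
    z: cameraPos.z + dz * distance,
  };
}
```

The returned point can be passed to `camera.lookAt(target.x, target.y, target.z)`, which lets Three.js compute the camera's rotation quaternion. Updating it from Street View's point-of-view change callback keeps both canvases pointing the same way.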

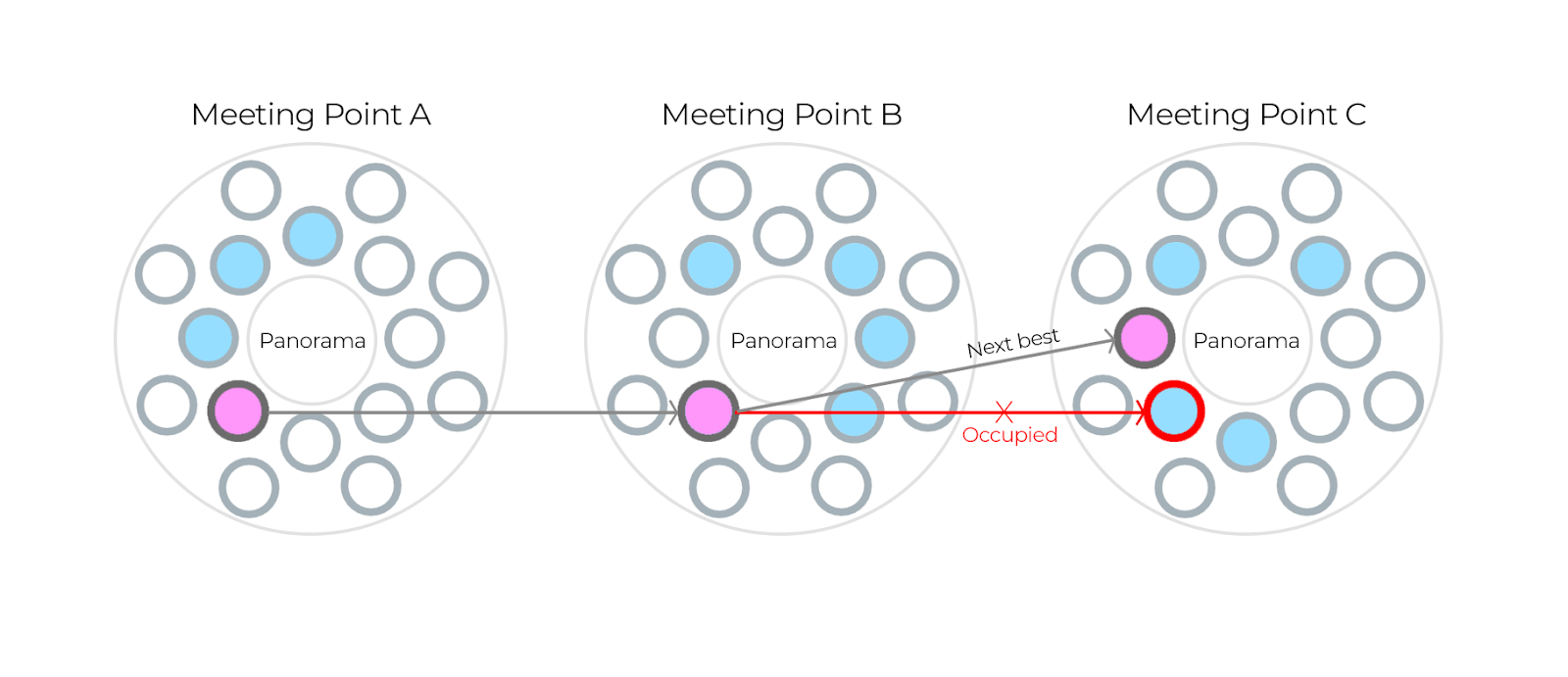

We can think of a meeting point as a cylindrical space centered on the position of the panoramic camera, where a specific number of users can congregate. Because every user sees the same panorama as their background, we need a strategy to keep all of them from ending up at the same point. We do this by limiting the number of positions a user can occupy inside a meeting point. These positions are assigned according to these two rules:
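The sketch below only illustrates the idea of a fixed set of positions inside a meeting point; it does not reproduce StreetMeet's two assignment rules. It hypothetically places `slotCount` slots evenly on a circle of `radius` around the panorama camera and gives each newcomer the lowest-numbered free slot. All names, parameters, and the first-free-slot policy are illustrative assumptions.

```typescript
// Sketch: fixed avatar slots around a meeting point.
// Slot layout and assignment policy are hypothetical.

type Vec3 = { x: number; y: number; z: number };

// Evenly spaced slots on a circle around the panorama center,
// starting at "north" (-Z) and going clockwise toward +X.
function slotPositions(center: Vec3, radius: number, slotCount: number, height = 0): Vec3[] {
  const slots: Vec3[] = [];
  for (let i = 0; i < slotCount; i++) {
    const angle = (2 * Math.PI * i) / slotCount;
    slots.push({
      x: center.x + radius * Math.sin(angle),
      y: center.y + height,
      z: center.z - radius * Math.cos(angle),
    });
  }
  return slots;
}

// Return the lowest-numbered free slot, or -1 if the meeting point is full.
function assignSlot(occupied: ReadonlySet<number>, slotCount: number): number {
  for (let i = 0; i < slotCount; i++) {
    if (!occupied.has(i)) return i;
  }
  return -1;
}
```

Keeping slots on a ring around the camera means no avatar sits at the panorama's own viewpoint, and the radius bounds how small or large avatars appear against the shared background.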

Web technology has evolved to the point where developers can create, with a few lines of code, a compelling application that is one click away for most web users. With a little more effort, and by selecting and combining the best APIs available, we can create a useful product that offers a completely different experience.

Spatial audio delivers an incredible, synchronous, and immersive experience. Recently, we paired High Fidelity’s Spatial Audio with Zoom and the results were amazing.

Getting started with High Fidelity’s Spatial Audio API has never been easier. Start by following our simple guide. From there, the guides page has additional documentation related to other applications built using our API. With just a few lines of simple code, you can create a high-quality, real-world audio experience for users.
