Online Audio Spaces Update: New Features for Virtual Event Organizers

It’s been about 8 weeks since we launched High Fidelity’s new audio spaces in beta. We really appreciate all the support, particularly if you have ...

If you’re not familiar with the term AEC, it’s an acronym for Architecture, Engineering and Construction, and in this context it refers to the design, modeling and simulation work done in that world. AEC has historically been one of the core drivers of innovation in virtual reality and 3D visualization. Recently, we learned about the AEC VR Hackathon held at Microsoft Reactor in San Francisco, Jan 6–8, and about the winning team, who took Best Overall Project with a High Fidelity-based VR project! One of the team members, Mike Varner, was awesome enough to take the time to tell us a bit more about the event and the workflow they developed, which the team calls RhiFi.

I’d say there were about 50-ish people, maybe fewer, at the start of the event, and at least one of the teams bowed out before the end. The attendees were a nice spread of architects, software engineers, and designers: a fairly diverse group. As with other hackathons I’ve been to, the environment was friendly, welcoming, and collaborative, with the scent of competition ever-present. Electrifying, even.

We wanted to create a tool that simply allows for the import of architectural models into a virtual reality environment that supports multiple users over a local network and has built-in, easy-to-use communication tools. High Fidelity happened to meet exactly those requirements as an off-the-shelf platform. As we dove deeper into our work, we realized that our pipe dream was actually possible: a complete workflow cycle that manages whatever changes you make to the model inside High Fidelity and pushes them back out to Rhinoceros. So with that in mind, we sallied forth.

Once the project is in an initial release state, the result should be fairly seamless: AEC teams will be able to review and edit their project in virtual reality in a smooth workflow that enhances their existing one. We feel this gives AEC teams the opportunity to engage with their subject matter and with each other better over the course of the project, and helps them avoid the tyranny of the Friday meeting and Death by PowerPoint. There’s a certain amount of empathy and body language that is communicated when a person is able to stand within their collaborative creation, and High Fidelity does a surprisingly good job of capturing and relaying that with its avatar and facial feature system. There’s also a deeper connection and understanding that human beings get from being able to spatially define something with their senses, rather than through numbers and lines on a surface. Likewise, this offers teams that tend to be geographically separated over the course of a project the ability to meet ad hoc and still get as much value as, if not more than, the standard in-person Friday meeting around a slide deck.

A dreamy, longer-term, pie-in-the-sky goal for the project would be for the workflow to interface with other 3D modeling programs (Revit, Blender, Maya, etc.), and for team authoring and review of content in VR to become an integral part of every 3D model creator’s workflow, not just in AEC.

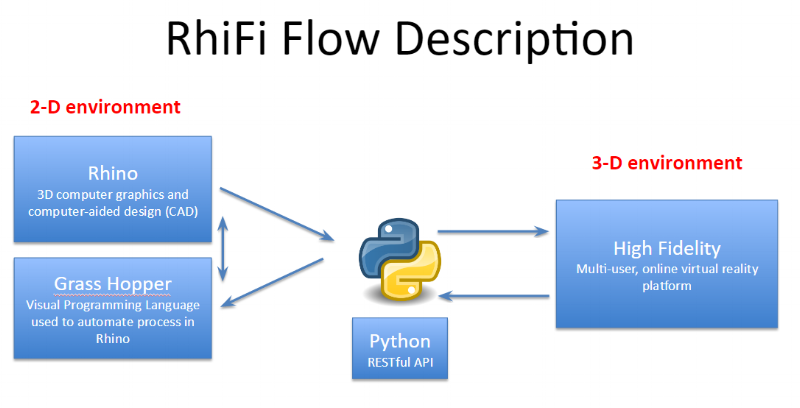

RhiFi is inherently a collaboration workflow. The basic gist is that a model is originally authored in Rhino and then pushed to an upload folder. On the High Fidelity end of things, JavaScript scripts grab the model and its associated data, import it into High Fidelity, and spawn it. The AEC team can then collaboratively explore the model and, using High Fidelity’s built-in entity editing system, edit it together in real time, immediately acting on feedback from the team. In the current state of both the AEC and VR industries, in terms of both products and consumer adoption, it’s important to view this tool as an enhancement to an existing workflow, and not an outright replacement.

Lots of scripting & a RESTful API. The four main components are:

— Rhinoceros, a 3D modeling program

— Grasshopper, a Visual Programming Language

— Python RESTful API

— High Fidelity, a virtual reality platform

A walk-through of one direction of the process would be:

1. Model is authored in Rhino

2. Model is redefined using Grasshopper via script, recording coordinate offsets

3. The model is uploaded to a designated upload folder

4. Scripts running on High Fidelity’s end make a request to the RESTful API for models, pull down any that are new, and then place them in the environment

5. The team can then review and edit their model to their liking
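
Step 4 is essentially a poll-and-diff loop. Here is a minimal sketch of that logic in Python, assuming the API can list the available model IDs and return one model’s JSON by ID. The names `pull_new_models`, `list_models` and `fetch_model` are hypothetical stand-ins for those HTTP calls, not part of RhiFi, and the scripts on the High Fidelity side are JavaScript rather than Python:

```python
from typing import Callable, Dict, Iterable, List, Set


def pull_new_models(
    list_models: Callable[[], Iterable[str]],
    fetch_model: Callable[[str], Dict],
    seen: Set[str],
) -> List[Dict]:
    """Fetch every model whose ID has not been seen yet.

    `list_models` and `fetch_model` are hypothetical wrappers around the
    REST API (for example, GET requests). `seen` is updated in place so
    that repeated polls only return models that are new.
    """
    new_models = []
    for model_id in list_models():
        if model_id in seen:
            continue  # already pulled down on an earlier poll
        new_models.append(fetch_model(model_id))
        seen.add(model_id)
    return new_models


if __name__ == "__main__":
    # A fake in-memory "server" so the sketch runs without a network.
    server = {"lobby": {"name": "lobby"}, "atrium": {"name": "atrium"}}
    seen: Set[str] = {"lobby"}
    fresh = pull_new_models(lambda: sorted(server), server.__getitem__, seen)
    print([model["name"] for model in fresh])
```

Passing the two API calls in as functions keeps the "is it new?" decision separate from the transport, so the same loop could sit behind a timer, a button, or a test.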

As with most technical things, this is best expressed with a picture:

At a high level, the system itself is fairly lightweight as all that is passed between Rhino and High Fidelity is JSON. The endpoints manage their uploads and downloads.
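
To make the "only JSON is passed" point concrete, here is a sketch of what such a payload might look like and how a recorded offset could be applied in each direction. The field names (`name`, `url`, `offset`), the example path, and the sign convention for the offset are all assumptions made for illustration; they are not the RhiFi schema:

```python
import json
from dataclasses import asdict, dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class ModelRecord:
    """Hypothetical JSON payload describing one uploaded model."""

    name: str
    url: str      # where the model file can be downloaded (assumed field)
    offset: Vec3  # coordinate offset recorded on the Rhino side (assumed field)

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "ModelRecord":
        data = json.loads(text)
        # JSON has no tuple type, so the offset comes back as a list.
        return cls(data["name"], data["url"], tuple(data["offset"]))


def to_vr(point: Vec3, offset: Vec3) -> Vec3:
    """Shift a Rhino-space point toward the VR origin (assumed convention)."""
    return tuple(p - o for p, o in zip(point, offset))


def to_rhino(point: Vec3, offset: Vec3) -> Vec3:
    """Undo `to_vr`, so edits made in VR can be pushed back to Rhino."""
    return tuple(p + o for p, o in zip(point, offset))


if __name__ == "__main__":
    record = ModelRecord("tower", "models/tower.obj", (10.0, 0.0, -2.5))
    print(record.to_json())
    print(to_vr((12.0, 4.0, 0.0), record.offset))
```

Keeping the offset in the payload is what would let the return trip work: whatever shift is applied on the way into VR can be reversed on the way back out.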

That is absolutely true. We feel that such a tool would be incredibly valuable not just to the AEC community, but to the VR community at large. We plan on shepherding the project as much as we can after the release, but we would very much like the community to be involved and contribute. We want this to be a tool that is built upon to help continue normalizing and leveraging VR as a serious and useful platform.

A couple:

Understand when your idea should be an enhancement, rather than a replacement. Far too often, new technologies are seen as the answer to replacing something, rather than enhancing it as it exists. The result is a compromise that showcases the new technology and is ‘super cool!’, but fails to do the task with at least as much efficiency as the previous workflow. ‘Super cool!’ creates initial buzz, but once that wears off, the user is left with whatever level of competence and maturity the design provides. So it’s important not to trade utility for gimmick, unless the gimmick actually brings something valuable to the table other than shallow pizazz.

In VR, user testing is absolutely necessary, so play test early and often, and listen to your users. They can give you feedback that your bias may conceal, and with VR those biases can be quite sneaky, given how much we detect and communicate completely subconsciously. Each person’s sensitivity levels vary, dramatically in some cases, and I’m not just talking about motion sickness.

Don’t make the user work for the experience any more than they should expect. There’s something I factor into my designs that I call the *Ahem* BS Coefficient. It’s a focused (and quite unscientific) user perspective metric that measures, in loose terms, how much BS a user must endure in order to engage with your product. Headset unresponsive, swapping audio outputs (for the 3rd time), disappearing controllers, restarting, and having to remove the HMD yet again because the menu flow wasn’t clear so now you have to Google it…these all factor highly into the BS Coefficient. When the threshold of BS is reached, a user will declare your product ‘BS!’ and disregard it. If the user’s BS Coefficient is high enough, they may actively campaign against your product, harming your adoption rates.

Really, it’s a subjective version of user frustration, as not all users’ frustrations are triggered by the same issues. In a nascent industry like modern VR, this is why it’s key to listen to user complaints of all kinds, and not dismiss those users as Luddites because they haven’t drunk the VR Kool-Aid. The typical nonsense we accept and deal with in general computing absolutely cannot be abided in VR. Those of us who got on the VR train early may easily forget that the numbers we need are made up of non-technical users, who are less likely to forgive issues that we simply accept as part and parcel of a new experience. We can get to ubiquitous, widespread adoption, but in order to get there, we need to meet users at least halfway. There needs to be an understanding that, for many, the promise and potential of VR is not self-evident, and its value needs to be relayed to them in terms and themes that are approachable and understandable. I’m reminded, as a cautionary tale, of Nintendo’s ‘Blue Ocean’ strategy for the Wii, which was to get all the non-gamers (grandmas and other traditionally non-gaming demographics) using their product. What we saw there was a dilution of the typical experience in favor of adoption, as well as an overreliance on a technical gimmick, which achieved the numbers that Nintendo wanted. However, once the whimsy of waggling motion controllers waned, platform sales, as well as developer interest, plummeted.

We saw a similar issue arise with mobile gaming. Titles like Farmville became household names, but once people caught on to how precisely those games are designed to separate you from your money, the whimsy wore thin with the general public. The middling gameplay was not enough to retain users, so the entire business model was based on quick-win turnover, which I don’t believe to be a good long-term strategy. The attempt to squeeze as much blood as you can from a tepid design results in a boring product and closed dev studios. In short, mobile gaming sold its soul before it even had a chance to develop one. The same risk exists for VR, should devs fail to create a bevy of experiences and use cases that cater to the many types of users, and instead try to capture the entire pie all at once. Focus on what you are attempting to achieve, and don’t do something just because it’s neat for 5 minutes. Good design is more crucial than ever, now that people will be standing in your experience. Push the envelope of what’s possible, but make sure it’s actually accessible to your intended audience, and not just the most tech-savvy among them. Ultimately, getting in, experiencing, and getting out of VR needs to be as simple for the user as making a phone call, and nothing less.

The challenge is making a compelling experience that is accessible without pandering unnecessarily. Agency and accessibility should complement each other. The user should feel guided just enough, but never controlled. Believe that we can achieve what we dream of for VR/AR. We’ve made amazing strides in the 2+ years that VR has once again been in the mainstream public eye, and we are on the cusp of critical mass adoption. Momentum is strong and getting stronger. Just keep studying, iterating, and applying the lessons learned. There is a humongous wealth of information, and there are a fantastic number of amazing communities that exist solely to help you and move VR forward. Find your local VR community meetup and attend. Look for a community Slack for VR. The SVVR, SFVR, and VRTK communities (shoutout to SeattleVR and VR Creators Network!) have been invaluable in helping me make contacts and advance my knowledge and skills. And know that right now, there is room and demand for everyone, so long as you keep an open and curious mind and are willing to put the hours in.

Final Thought — A colorful individual once gave me some surprisingly sound advice that has served me well:

“You can love an idea, but never marry it.”

Congratulations to Mike and the rest of the team on their victory at the AEC VR Hackathon, and our thanks to them for sharing their thoughts, ideas and the core behind RhiFi with the community.

